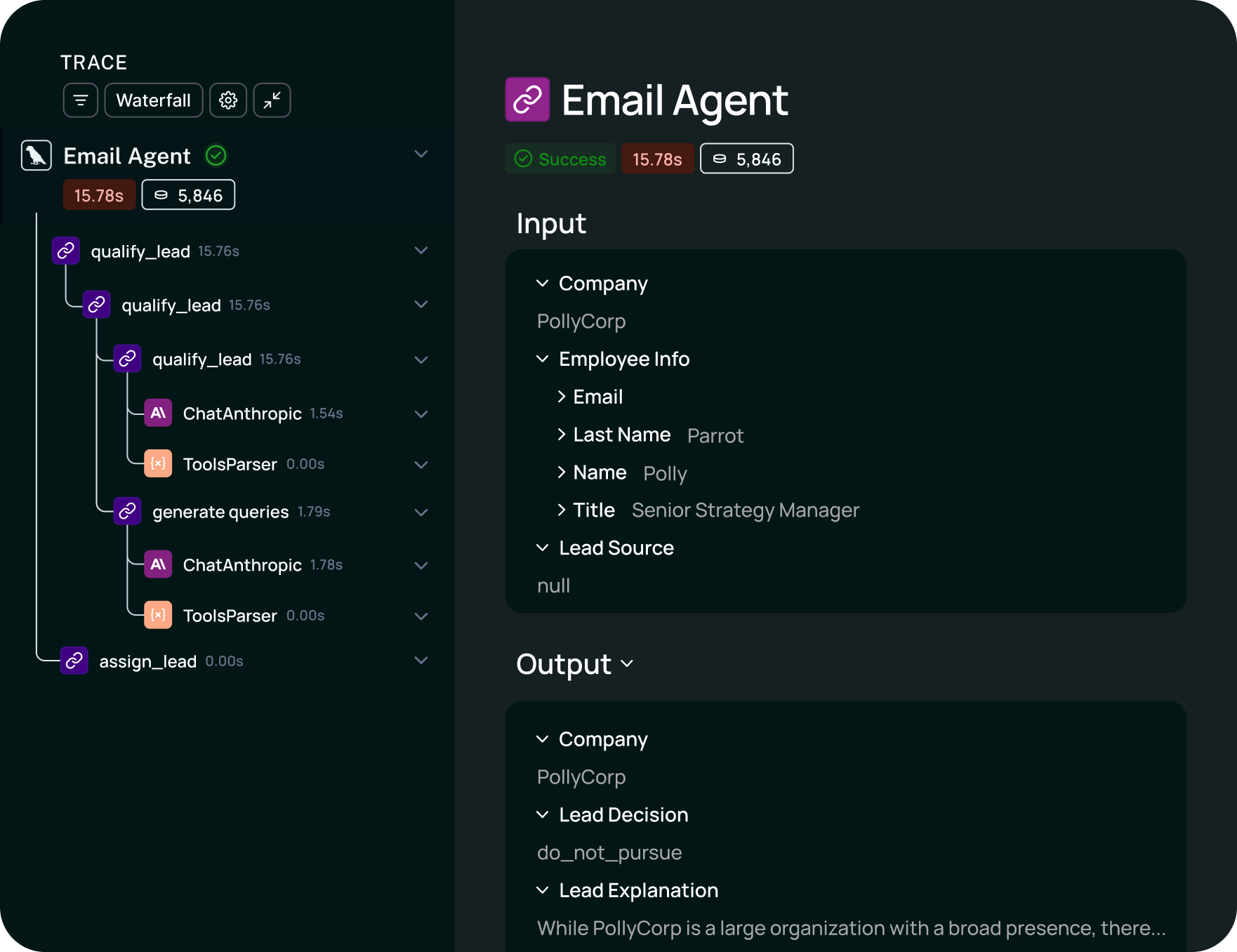

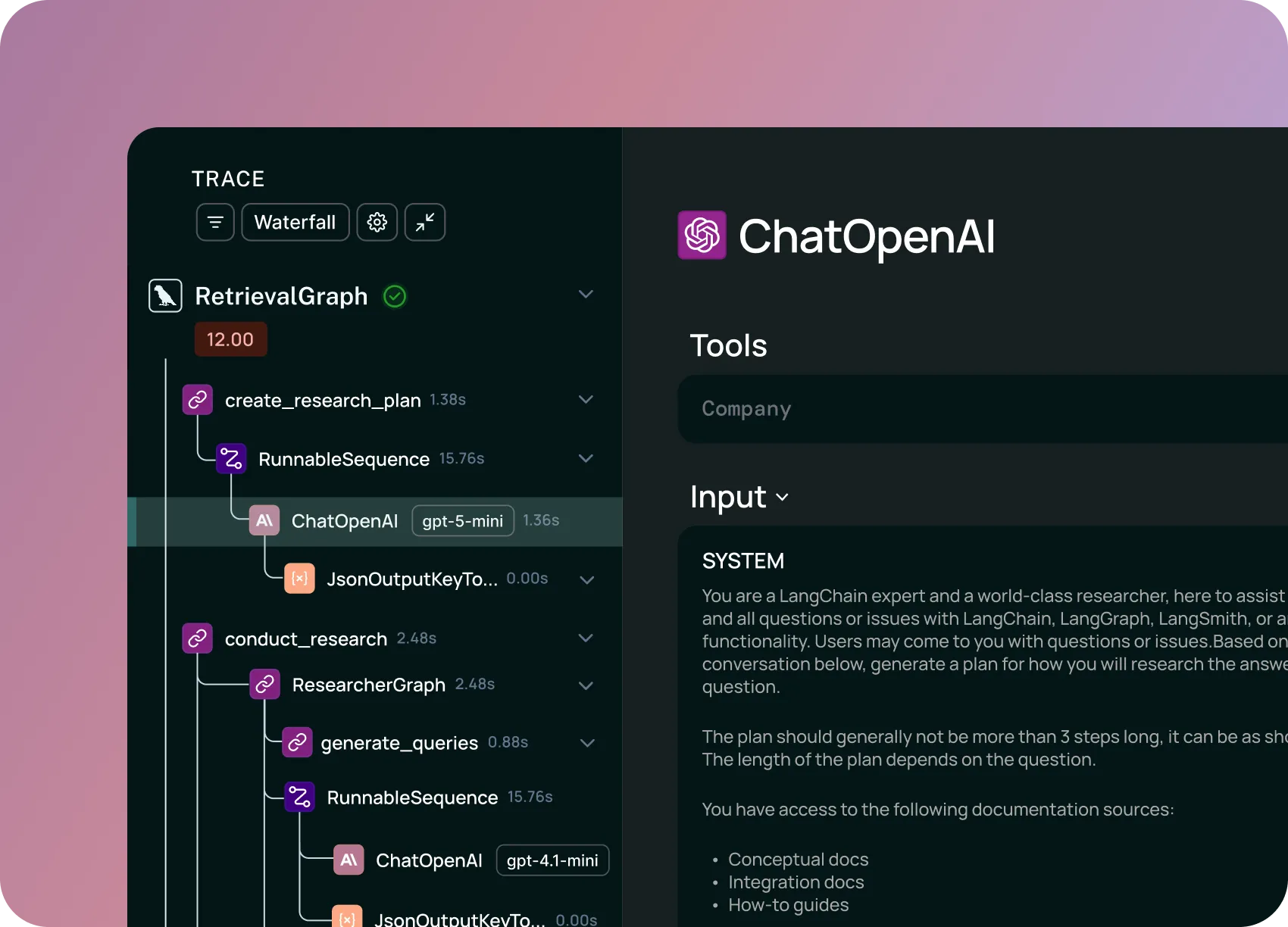

Find failures fast with agent tracing

Quickly debug and understand non-deterministic LLM app behavior with tracing. See what your agent is doing step by step, then fix issues to improve latency and response quality.

LangSmith Observability gives you complete visibility into agent behavior with tracing, real-time monitoring, alerting, and high-level insights into usage.

Helping top teams ship reliable agents


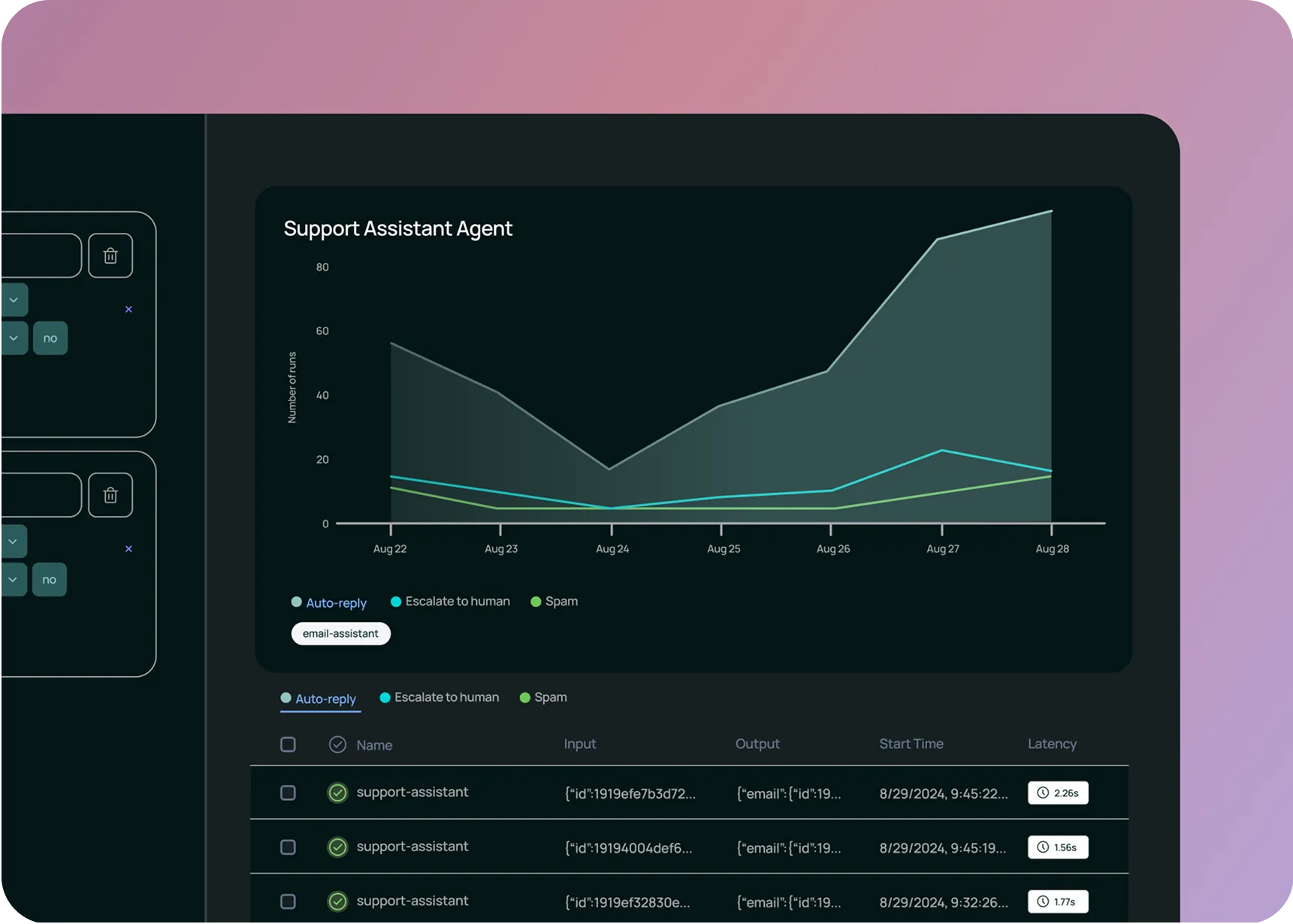

Track business-critical metrics like costs, latency, and response quality with live dashboards. Get alerts when issues happen and drill into the root cause.

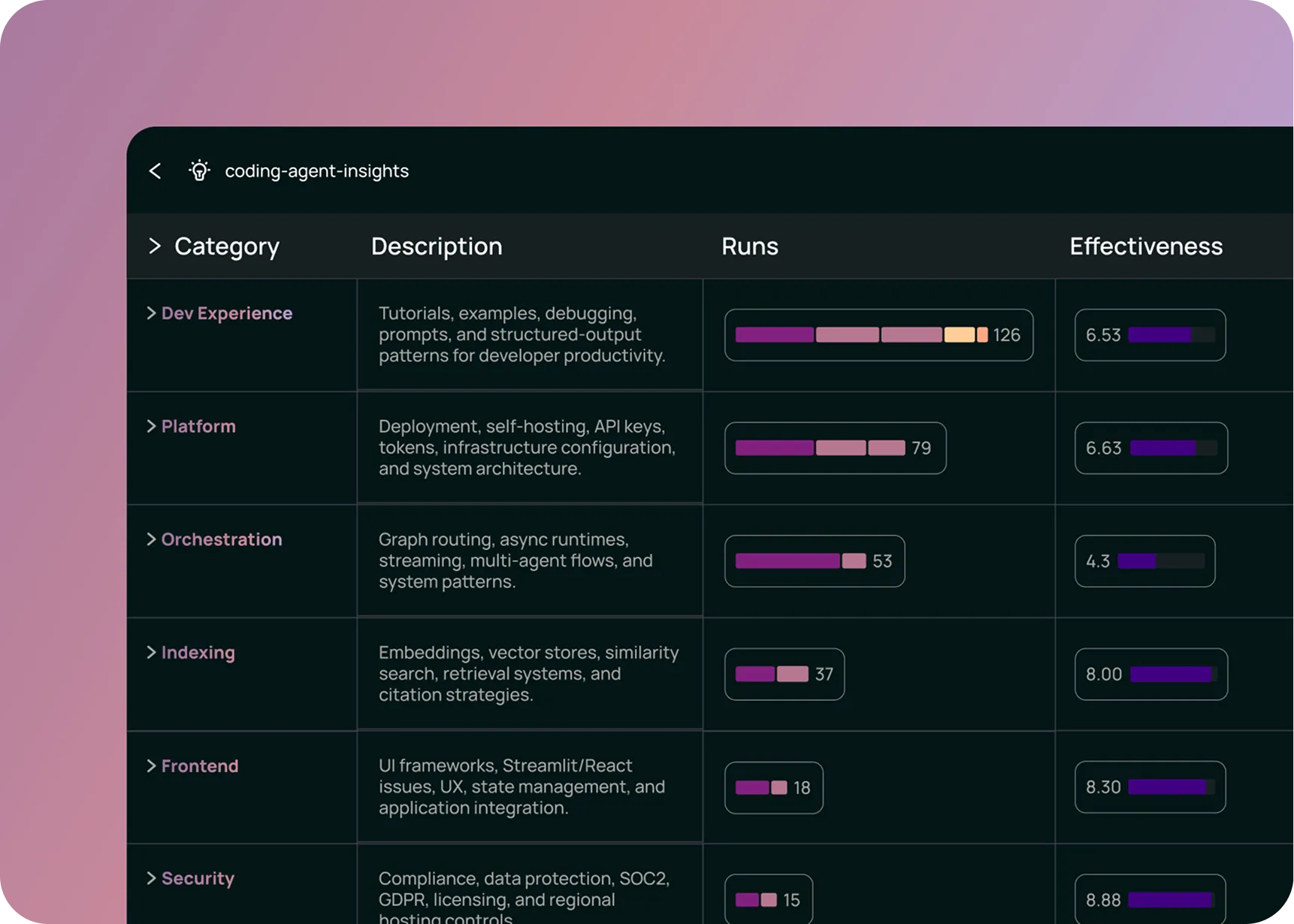

See clusters of similar conversations to understand what users actually want and quickly find all instances of similar problems to address systemic issues.

LangSmith works with any framework. If you’re already using LangChain or LangGraph, just set one environment variable to get started with tracing your AI application.
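As a rough sketch of what "set one environment variable" can look like from Python: the variable names below (`LANGSMITH_TRACING`, `LANGSMITH_API_KEY`) are assumptions based on recent SDK releases (older ones read `LANGCHAIN_TRACING_V2`), and `enable_tracing` is a hypothetical helper, not part of LangSmith. Check the docs for the names your version expects.

```python
import os

TRACING_VAR = "LANGSMITH_TRACING"  # assumed name; older SDKs read LANGCHAIN_TRACING_V2
API_KEY_VAR = "LANGSMITH_API_KEY"  # assumed name for the credential


def enable_tracing(env=None):
    """Hypothetical helper: switch the tracing flag on and report the result.

    `env` defaults to os.environ; pass a plain dict to try it without
    touching the real process environment.
    """
    env = os.environ if env is None else env
    env[TRACING_VAR] = "true"
    return {
        "tracing": env[TRACING_VAR],
        "has_api_key": bool(env.get(API_KEY_VAR)),
    }
```

In practice the flag is usually exported in the shell or deployment config before the process starts. The helper only makes explicit that tracing is switched on by configuration, with no code changes, and that a credential is typically needed alongside it.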

LangSmith works with any framework. You can use it with or without our open source frameworks, LangChain and LangGraph.

Yes, LangSmith supports OTel to unify your observability stack across services. Your application does not need to be written in Python or TypeScript. See the docs.

Yes. You can use LangSmith Observability with or without Evaluation. For all plan types, you'll get access to both and only pay for what you use.

Yes, we allow customers to self-host LangSmith on our Enterprise plan. We deliver the software to run on your Kubernetes cluster, and data will not leave your environment. For more information, check out our documentation.

When using LangSmith hosted at smith.langchain.com, data is stored in GCP us-central1. If you’re on the Enterprise plan, we can deliver LangSmith to run on your Kubernetes cluster in AWS, GCP, or Azure so that data never leaves your environment. For more information, check out our documentation.

No, LangSmith does not add any latency to your application. The LangSmith SDK includes a callback handler that sends traces to a LangSmith trace collector, which runs as an async, distributed process. Additionally, if LangSmith experiences an incident, your application's performance will not be disrupted.
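The pattern described above, where traces are handed off and sent outside the request path, can be illustrated with a small generic sketch. This is not the LangSmith SDK's implementation: `BackgroundTraceSender` and its `send` callable are hypothetical names. It shows one common way to keep trace delivery, and delivery failures, away from application code.

```python
import queue
import threading


class BackgroundTraceSender:
    """Hypothetical illustration: buffer trace events and ship them off the request path."""

    def __init__(self, send, max_buffer=1000):
        self._send = send  # callable that delivers one event to a collector
        self._queue = queue.Queue(maxsize=max_buffer)
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def record(self, event):
        """Enqueue without blocking; drop the event if the buffer is full."""
        try:
            self._queue.put_nowait(event)
            return True
        except queue.Full:
            return False

    def _drain(self):
        # Runs on the worker thread: deliver events one at a time, in order.
        while True:
            event = self._queue.get()
            try:
                self._send(event)
            except Exception:
                pass  # a collector outage must not reach the application
            finally:
                self._queue.task_done()

    def flush(self):
        """Block until every queued event has been handed to send()."""
        self._queue.join()
```

The application thread only pays for a non-blocking enqueue. Network time and any collector errors stay on the worker thread, which is why an incident on the collector side need not slow down or break the caller.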

We will not train on your data, and you own all rights to your data. See LangSmith Terms of Service for more information.

See our pricing page for more information, and find a plan that works for you.